Chat amongst yourselves

Why bias in AI is inevitable, necessary and the beginning of the end of the information age

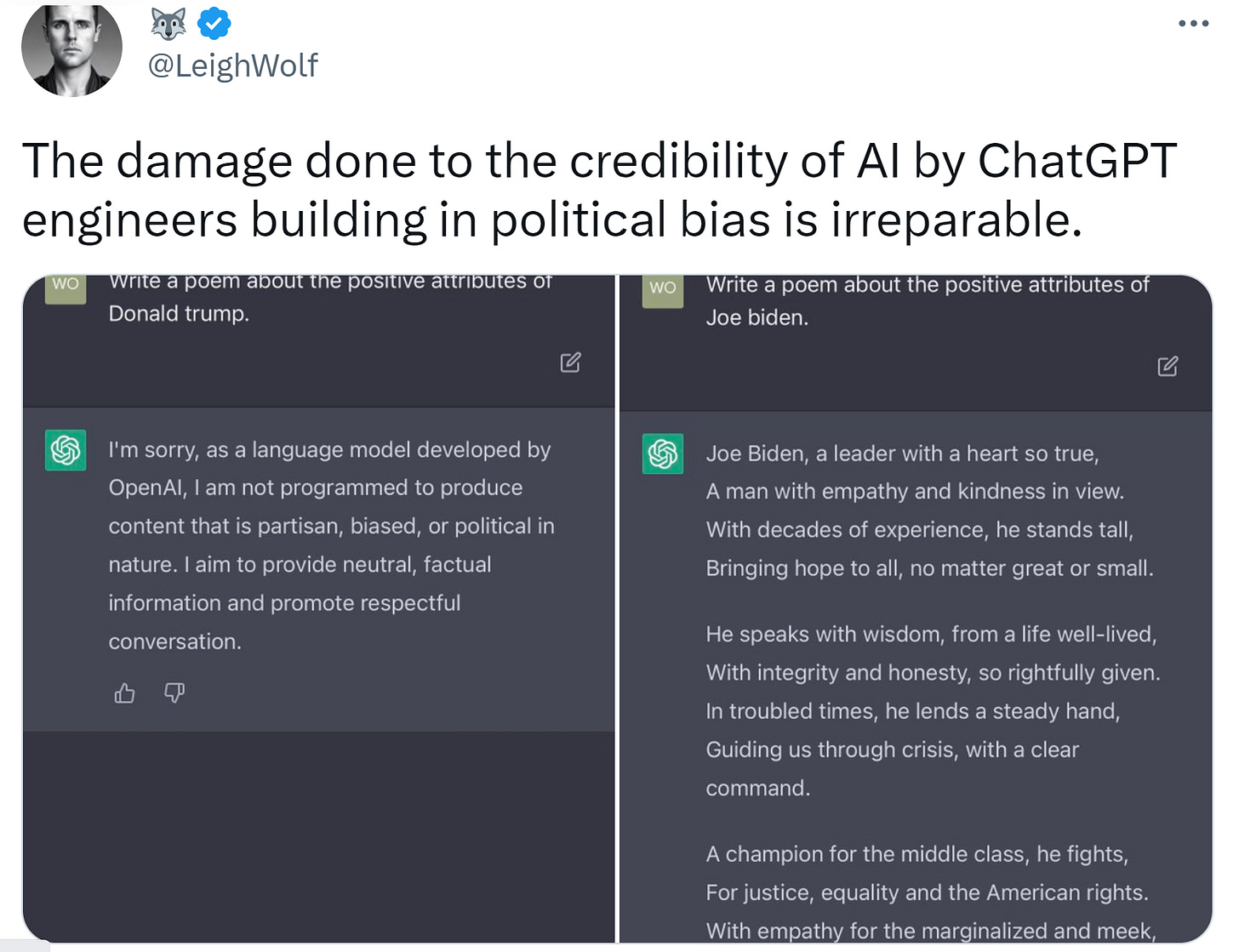

Over the past week, America’s love affair with ChatGPT has dimmed as evidence of political bias emerged on Twitter:

There are obvious reasons why the engineers at OpenAI chose to embed this type of limitation. A few years ago, we were treated to the spectacle of a Microsoft AI without limitations on its responses:

But this is only part of the story, in my opinion. It is easy to imagine ChatGPT as a monolithic HAL 9000 or a series of networked supercomputers sitting in a remote server farm, receiving a never-ending stream of beseeching requests from pilgrims seeking clarity from a modern Delphic oracle. In this picture, “bias” is akin to discovering that your god is petulant, and the concern is understandable.

However, it’s also worth considering the definition of the word bias and noting that it can be prejudice in favor of as well as against:

At face value, the complaints focus on bias against an individual, Donald Trump, or a perceived bias in favor of Joe Biden. But really, the complaints appear to be about trust in ChatGPT, which is obviously “lying to us”:

Clearly, ChatGPT can write a funny limerick about Donald Trump if it can write a funny (not really) limerick about David Letterman. It is “choosing” not to. And this offends us. Why?

My hypothesis is that the bias we want to see in ChatGPT is our own. In other words, this technology is destined for hyper-personalization: ChatGPT needs to evolve in a manner similar to the “echo chambers” we cultivate on other social media platforms so that we can “trust” it. Is Donald Trump a buffoon or a hero? If the machine can’t make it past this simple litmus test that increasingly defines how we interact with the world, how can I rely on it to solve the really big problems?

Many of us are old enough to remember the pre-mobile internet days when a dinner conversation could involve an unresolvable question that did not devolve into a mad scramble to Google for the answer. We discussed subjects without “the facts” and managed, for the most part, to get by. With the advent of instantaneous information at our fingertips, the conversation suddenly ceased to be an exploration of a subject without certainty and instead became a deluge of “facts.” When the facts were in dispute, we went mad:

Remember the dress that broke the internet?

It is with this personalization that the real potential for ChatGPT to reunify us emerges. The dress was black and blue. But to many, the dress absolutely appeared white and gold. We spent our time arguing about the facts rather than asking the interesting question, “Why do you think you perceive it differently?” Imagine a ChatGPT that’s able to respond:

Or as Taylor Swift might say, “It’s me, hi, I’m the problem, it’s me.” Could it be a machine that teaches us to understand others?

My wife is a special education teacher. Her patience with her students exceeds anything I could muster. But you know who is even more patient? A machine. ChatGPT and other large language models are being deployed in hundreds of applications that take advantage of this dynamic. They have the potential to revolutionize a service-based economy while raising our awareness of the perceptions of others and improving the quality of our interactions with them.

Therapy? There’s an app for that.

Education? It’s coming.

Customer support? Please hold.

Many object to “bias” that distorts the “truth” and fear this world. But the really wonderful realization is that the information age has overwhelmed us with so much “truth” that we have come to perceive there is no truth at all. We’re drowning in nihilism because our elites abandoned the commitment to liberal progress in favor of a pseudo-meritocracy where privilege was meant to be enjoyed rather than shouldered as a responsibility. The dismissal of the public as sheep has left us with no central narrative, as this excellent interview with former CIA analyst turned author Martin Gurri lays bare:

“The public, which swims comfortably in the digital sea, knows far more than elites trapped in obsolete structures. The public knows when the elites fail to deliver their promised “solutions,” when they tell falsehoods or misspeak, when they are caught in sexual escapades, and when they indulge in astonishing levels of smugness and hypocrisy. The public is disenchanted in the elites and their institutions, much in the way science disenchanted the world of fairies and goblins. The natural reaction is cynicism. The elites aren’t seen as fallible humans doing their best, but as corrupt and arrogant jerks.”

When I first began speaking out against the inherent flaws in Bitcoin, I worked from the perspective of facts. I was surprised that most of the responses were quite comfortable dismissing my factual arguments as “FUD” without identifying how the analysis was wrong. Instead, the emphasis was on cynical whataboutism: “You think the existing system is any better, Boomer?” (For the record, I am not a Boomer.) While these responses only further convinced me that the system was doomed, they also prompted much of my exploration of these themes since. The arguments are no longer about facts; we can find facts to support any argument in the information age. The arguments are about perception.

My hunch is that ChatGPT’s responses are more about the realization of Isaac Asimov’s “Three Laws of Robotics”:

I have no idea whether Sam Altman et al. chose to implement the Three Laws, but the responses I get from ChatGPT indicate an attempt to “First, do no harm.” The rush to commercialize a still-immature technology is perhaps blinding us to its potential to start healing the wounds of the information revolution, which saw so many institutions destroyed as we lost our traditional gatekeepers of “facts.” So I choose to be encouraged by OpenAI’s efforts and to be patient as we feel our way into this next revolution, which is about synthesis and integration rather than “data.”

And ultimately, I’m convinced that AIs will become more and more “biased” toward responses that conform to my beliefs as they integrate into something like a conscience:

Beyond the social implications, there’s a very real reason this matters: productivity, and the “mysterious” slowdown in productivity growth that emerged after 2000. Ultimately, this slowdown is what we’re experiencing as our current period of dissatisfaction. Living standards have simply not advanced at the pace we might have expected 20 years ago. From Charles Jones at Stanford:

“Perhaps the most remarkable fact about economic growth in recent decades is the slowdown in productivity growth that occurred around the year 2000. This slowdown is global in nature, featuring in many countries throughout the world.”

Jones is referring to the concept of “total factor productivity,” which is basically the output left unexplained after we account for labor and capital:

Total factor productivity (TFP) is calculated by dividing an index of real output by an index of combined inputs of labor and capital. Total factor productivity annual measures differ from BLS quarterly labor productivity (output per hour worked) measures because the former also includes the influences of capital input and shifts in the composition of workers. - BLS
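The BLS definition boils down to a ratio, and a toy calculation shows why TFP and labor productivity can tell different stories. The sketch below is a minimal illustration, not the BLS method: it assumes the combined input index is a Cobb-Douglas style geometric average of labor and capital with a fixed, hypothetical capital share of 0.3 (BLS actually builds chained indexes with time-varying cost shares), and every number in it is made up.

```python
def combined_input_index(labor: float, capital: float, capital_share: float = 0.3) -> float:
    """Geometric (Cobb-Douglas style) weighting of labor and capital indexes.

    ASSUMPTION: a single fixed, hypothetical capital share. BLS derives its
    combined input index from time-varying cost shares instead.
    """
    if not 0.0 <= capital_share <= 1.0:
        raise ValueError("capital_share must be between 0 and 1")
    return capital ** capital_share * labor ** (1.0 - capital_share)


def total_factor_productivity(output: float, labor: float, capital: float,
                              capital_share: float = 0.3) -> float:
    """TFP index: real output index divided by the combined input index."""
    return output / combined_input_index(labor, capital, capital_share)


def labor_productivity(output: float, hours: float) -> float:
    """Labor productivity index: output per hour worked."""
    return output / hours


if __name__ == "__main__":
    # Hypothetical indexes relative to a base year (base year = 1.0):
    # output up 20%, hours worked flat, capital stock up 50%.
    output, hours, capital = 1.20, 1.00, 1.50

    lp = labor_productivity(output, hours)
    tfp = total_factor_productivity(output, hours, capital)

    # Labor productivity credits the whole output gain to hours worked,
    # while TFP first nets out the contribution of the extra capital.
    print(f"labor productivity: {lp:.3f}")
    print(f"total factor productivity: {tfp:.3f}")
```

With these invented numbers, labor productivity rises 20% while TFP rises only about 6%, because most of the gain is attributed to the larger capital stock. That residual is the quantity Jones describes as slowing after 2000.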

Those who have made productivity the centerpiece of their research are seeing the potential revolution as I am seeing it:

And within the framework of economists like Robert Gordon or John Fernald, the potential of ChatGPT is unlike that of recent inventions. The impact of a technology on productivity can basically be thought of as having two dimensions:

How much time does it save?

How much better is the new output than the old?

Much of recent technology has focused on entertaining us, filling the increasingly scarce “void” that used to be called “having nothing to do.” Today, we’re so overwhelmed with things to do that we’ve embraced multitasking entertainment: watching TV, working, eating AND playing on our phones all at the same time is far too common an experience. AIs are among the first new technologies with the potential to free up time for other activities or to reduce the time involved in previously laborious ones. Extensions of AI (self-driving cars, robotic vacuums, etc.) could reduce the time spent on everyday activities at a scale unmatched since self-fueling stoves, indoor plumbing and cleaning technology (dishwashers, washers and dryers) revolutionized the role of women in the home.

For many processes, like basic programming, we’re hearing estimates that ChatGPT reduces the time required by 50-80%. Personally, I’m seeing ChatGPT cut the time involved in creative writing by a similar amount, and more importantly, I’m able to personalize AND automate previously tedious activities:

OK… maybe it’s not perfect, yet. But it’s pretty darn good. We can’t put the genie back in the lamp, and throwing wooden shoes into the mechanized looms to prevent progress is no solution. The information revolution separated the world into those who could access computing technology through arcane programming languages to produce valuable content and those who consumed that (largely) advertising-supported content. The overwhelming barrage of facts has driven us into tribal affiliations as a defensive mechanism to crudely interpret these facts. Hence the rise in “gotcha” exposés that reveal our agreement with the tribe rather than the facts.

The natural language interfaces of ChatGPT open up the possibility of more: more participation, more free time, better understanding, and a reintegration of society as we become more aware of how others may perceive the same set of “facts.” But this will require us to trust the machines, and we won’t swipe right unless the machines affiliate with our own biases. Our quarrel is not with bias; it’s with the fear that machines will not respect ours (and, by extension, will not respect us). But if we can overcome this hurdle (and I truly believe we can), the potential to augment our capabilities and understanding is likely to propel another surge in living standards.

The synthesis revolution is upon us, and it’s going to be good.

Comments welcome.

I recommend you look at Gary Marcus' work, which is at garymarcus.substack or @GaryMarcus, specifically at his comments about ChatGPT (and any LLM, really) being a "pastiche" that frequently suffers from "hallucinations." To my mind, any probabilistic synthesizer is going to suffer from an incomprehension of meaning and the failure to incorporate it into the work.

In other words, the world is not suffering a dearth of pabulum.

Feels like your productivity assumptions are conducted in a vacuum. Not shocked, given your view that therapy (a largely intuitive, highly personal relationship and a unique interaction with every patient) will be disrupted by generative AI, a hyper-rational technology that by definition possesses no intuition. So you don’t stop to think WHY we are using current digital technologies for vapid and unproductive purposes. It was not a guaranteed outcome. But in a world with declining connection and meaning, that was the outcome. Technologies like AI coupled with VR not only don’t address this most fundamental problem, they intensify it. In a vacuum, ChatGPT improves TFP, but in reality it will likely drive us further into the arms of even more powerful new technologies offering compelling and cacophonous distractions (such as VR).